Research at scale

Search that understands your research

Find exactly what you need across millions of papers, with AI that understands context and relevance

Comprehensive research in minutes, not weeks

AI-powered discovery that searches across databases, analyzes thousands of papers, and extracts the insights you need

Write with confidence, cite with precision

Every claim automatically linked to source material. No more manual citation hunting or formatting headaches.

Nguyen et al., 2024 - GLP-1 RAs & MACE outcomes

Singer et al., 2016 - Sepsis-3 definitions

Designed around how researchers actually work

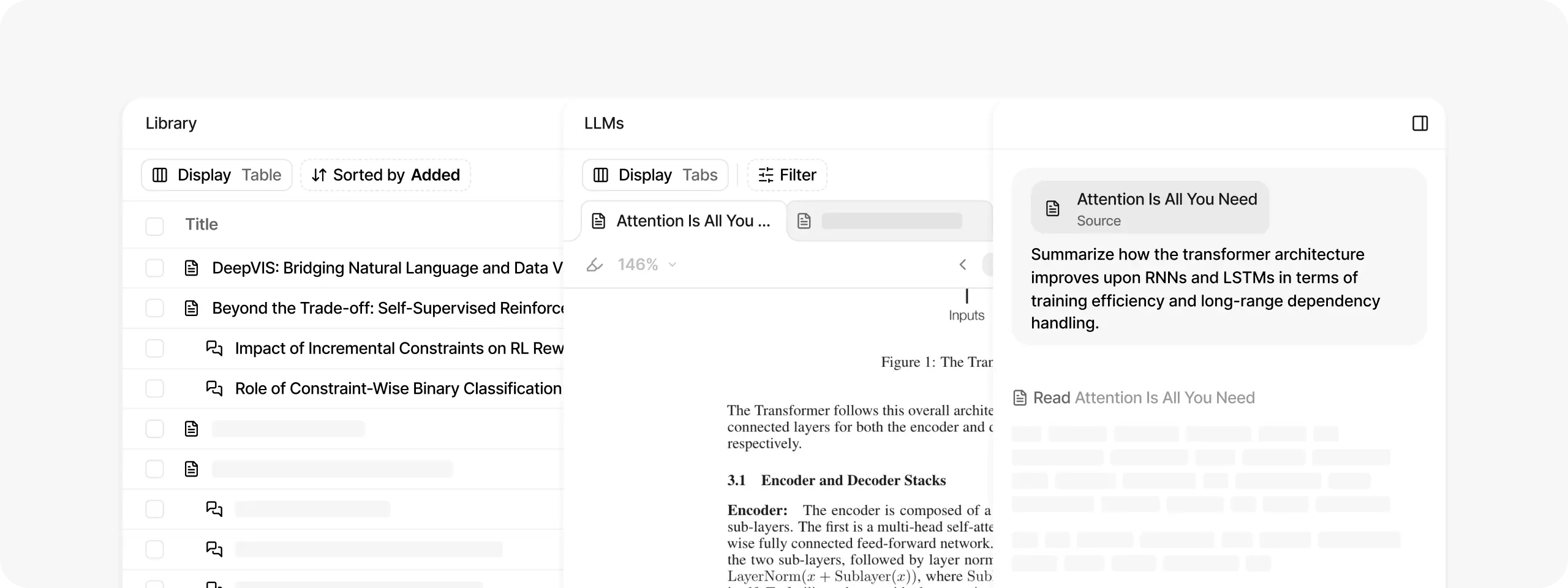

Traceable to the source

Every answer links directly to its origin, letting you verify claims in one click

Grounded in your documents

Responses draw only from your uploaded materials, with no fabricated information

Citations on autopilot

Relevant references surface automatically as you write, matched to your content

Everything you need in one integrated platform

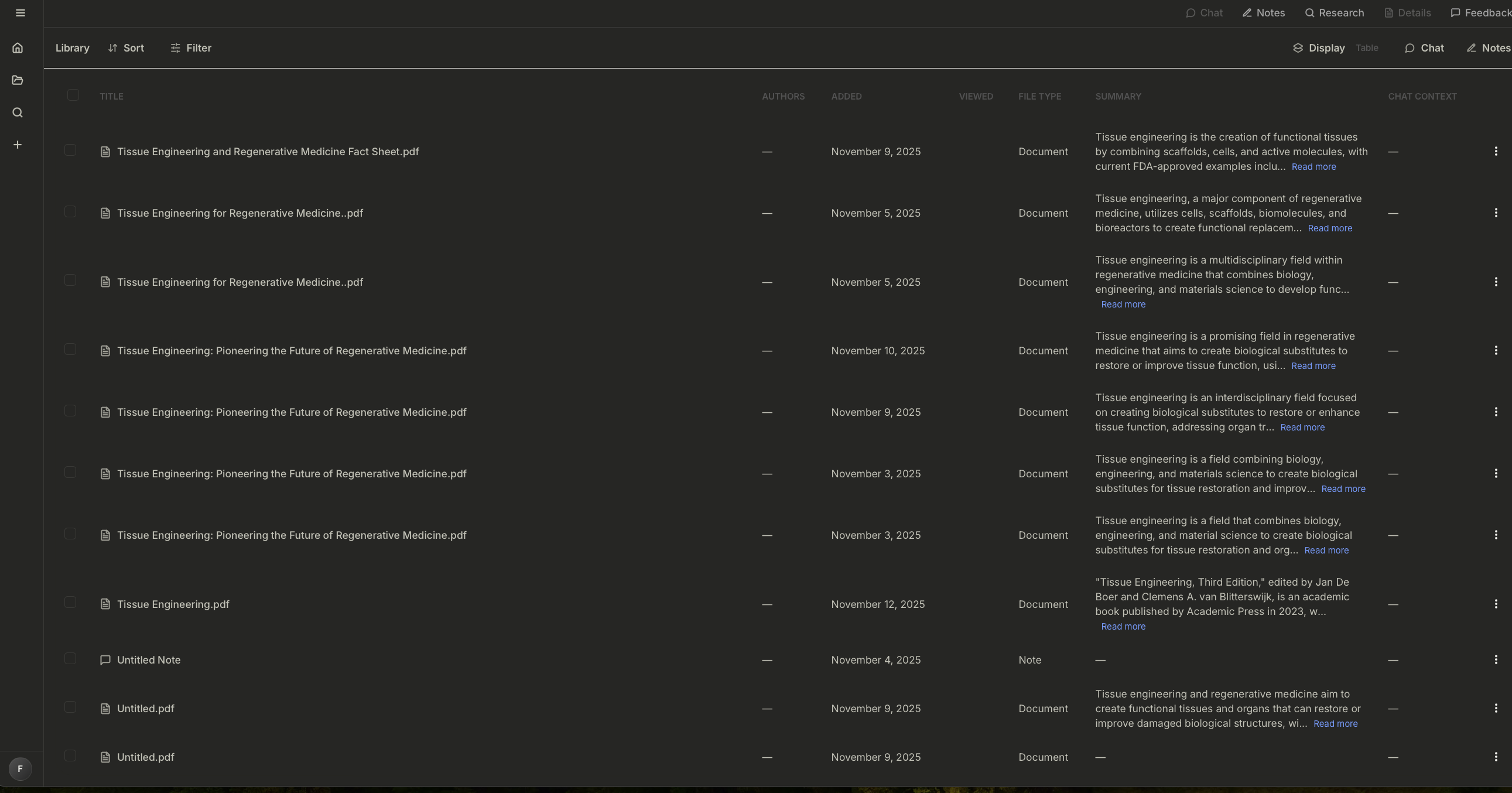

From research collection to final draft, Serenoty connects your entire workflow with seamless integrations

Organize papers, notes, and citations in customizable folders with powerful search and filtering

Connect with Google Drive, Dropbox, OneDrive, and other cloud storage platforms

Export to Notion, Overleaf, Zotero, Canvas, Blackboard, and Benchling with one click

All your changes sync automatically across devices and integrated platforms

Read PDFs, highlight key findings, and add notes directly within your workspace

Auto-import from PubMed, arXiv, and Scholar with smart categorization and tagging